In data centers today, it seems that almost everything matters more than energy efficiency. Investments in reliability, capacity expansion and revenue protection all receive higher priority than any investment aimed at cutting operating expenses through greater efficiency.

So does this mean that efficiency really doesn’t matter? Of course it matters. Lawrence Berkeley National Laboratory recently issued a data center energy report showing just how much efficiency improvements have slowed the industry’s energy consumption, saving a projected 620 billion kWh between 2010 and 2020.

The disconnect in investment priorities occurs when people view efficiency from the overly narrow perspective of cutting back.

Viewed through a different lens, efficiency in fact has transformational power.

Productivity is an area ripe for improvements enabled specifically by IoT and automation. Automation’s impact on productivity is often downplayed by employees who see it as the first step toward job reductions. And sure, that happens: automation will replace some jobs. But if experienced, talented people are spending their time on tasks that could be automated, your operational productivity is suffering. Those employees can and should be redeployed to more valuable work. And because most data centers run with very lean staffing, your employees are already under enormous pressure to keep operations running perfectly and without downtime. Productivity matters here as well. Making sure your employees are working on the right, highest-impact activities generates direct returns in cost, facility reliability and job satisfaction.

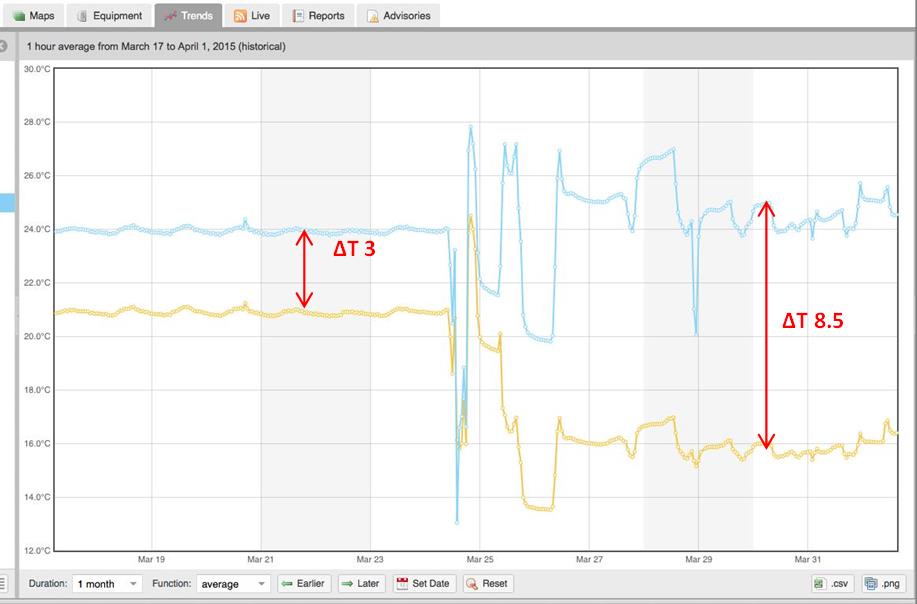

Outsourcing is another target. Outsourcing maintenance operations has become common practice, yet how often are third-party services monitored for efficiency? Comparing the performance of a room or a piece of equipment before and after maintenance is telling. Viewed alongside operational data, these details can show where you are overspending on maintenance contracts or where dollars could be reallocated for greater benefit.

And then there is time. In a 2014 Harvard Business Review article, Bain & Company called time “your scarcest resource,” which makes it a logical target for efficiency improvement. Here’s an example. Data center staff will quite often add cooling equipment by default to support new or additional IT load. A closer look at the right data often reveals that the facility can handle the additional load immediately, without new equipment. A quick data dive can save months of procurement and deployment time while accelerating your time to the revenue generated by the additional IT load.

Every time employees can stop, or spend less time on, a low-value activity, they can achieve results in another area faster. Likewise, every time you free up employee time for more creative or innovative work, you have an opportunity to capture a competitive advantage. According to a KPMG report cited by the Silicon Valley Beat, the tech sector is already focused on this idea, using automation and machine learning to gain new revenue advantages as well as efficiency improvements.

Investments in efficiency, when viewed through the lens of “cutting back,” will continue to receive low priority. However, efficiency projects focused on productivity or time to revenue will pay off with an immediate top-line effect. They will uncover ways to simultaneously increase return on capital, improve workforce productivity and accelerate new sources of revenue. And that’s where you need to put your money.