More Cooling With Less $$

My last post took a look at the maintenance savings possible through more efficient data center/facility cooling management. You can gain further savings by increasing the capacity of your existing air handling/air conditioning units. It is even possible to add IT load without buying new air conditioners or, at the least, while deferring those purchases. Here’s how.

Data centers and buildings have naturally occurring air stratification. Many facilities deliver cool air from an underfloor plenum at low velocity. As that air absorbs heat, it rises, so the coolest air stays near the floor. Because server racks sit on the floor, they sit in a colder area on average. The air conditioners, however, draw from higher in the room, capturing the hot air from above and delivering it, once cooled, to the underfloor plenum. This vertical stratification creates an opportunity to deliver cooler air to servers and at the same time increase cooling capacity by drawing return air from higher in the room.

However, this isn’t easy to achieve. The problem is that uncoordinated or decentralized control of air conditioners often causes some of the units to deliver uncooled air into the underfloor plenum. There, the mixing of cooled and uncooled air raises server inlet air temperatures and ultimately lowers return-air temperatures, which reduces the capacity of the cooling equipment.
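To make the mixing effect concrete, here is a rough Python sketch. It is a first-order approximation, not a model of any particular facility: it assumes the plenum temperature is the airflow-weighted average of what each unit delivers, and that a unit's sensible cooling capacity scales with the difference between return and supply temperature at fixed airflow. The function names, the air properties, and every number in the example are illustrative assumptions, not figures from a real site.

```python
# Approximate properties of air near room conditions (assumed constants).
AIR_DENSITY = 1.2   # kg/m^3
AIR_CP = 1.005      # kJ/(kg*K)


def mixed_supply_temp(units):
    """Airflow-weighted average temperature of air entering the plenum.

    `units` is a list of (airflow_m3s, supply_temp_c) pairs, one per unit.
    """
    total_flow = sum(flow for flow, _ in units)
    return sum(flow * temp for flow, temp in units) / total_flow


def sensible_capacity_kw(airflow_m3s, return_c, supply_c):
    """Sensible heat removed by one unit, in kW, at a fixed airflow."""
    return AIR_DENSITY * AIR_CP * airflow_m3s * (return_c - supply_c)


# Hypothetical room: three units moving 5 m^3/s each.
all_cooling = [(5.0, 15.0), (5.0, 15.0), (5.0, 15.0)]
one_idle = [(5.0, 15.0), (5.0, 15.0), (5.0, 27.0)]  # third unit passes 27 C air

print(mixed_supply_temp(all_cooling))  # plenum air when every unit cools
print(mixed_supply_temp(one_idle))     # warmer plenum air when one unit does not

# Higher return-air temperature means more heat removed per unit.
print(sensible_capacity_kw(5.0, return_c=27.0, supply_c=15.0))
print(sensible_capacity_kw(5.0, return_c=30.0, supply_c=15.0))
```

In this made-up case, a single unit passing uncooled air warms the plenum by several degrees, while a unit that draws warmer return air removes noticeably more heat at the same airflow.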

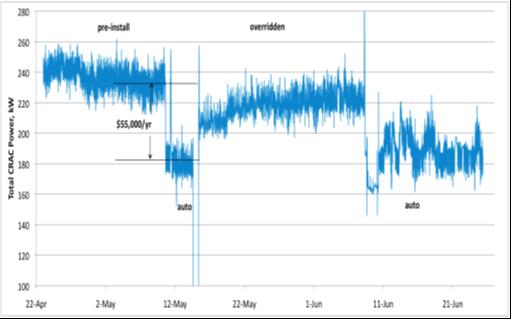

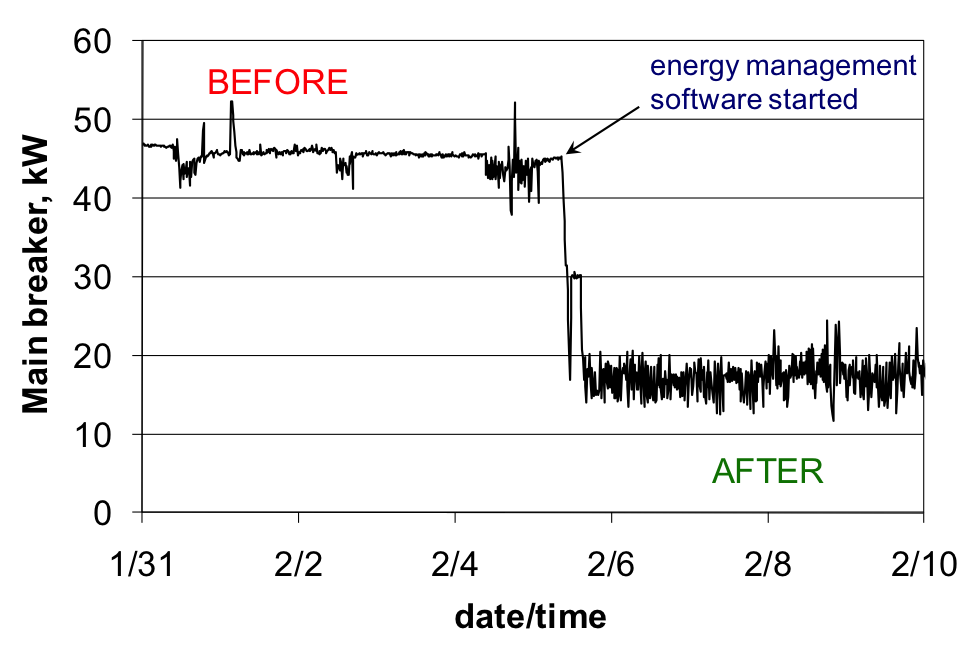

A cooling management system can establish a colder profile at the bottom of the rack and make sure that each air conditioner is actually having a cooling effect, rather than running ineffectively and adding heat through its own operation. An intelligent cooling energy management system dynamically right-sizes air conditioning unit capacity loads, coordinating their combined operation so that all the units deliver cool air and hot return air from some units doesn’t mix with cold air from others. This unit-by-unit but coordinated control squeezes the maximum efficiency out of all available units so that, even at full load, inefficiency due to mixing is avoided and significant capacity-improving benefits are gained.
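As a small illustration of checking that each unit is "actually having a cooling effect," the sketch below flags units whose supply air is barely cooler than their return air. This is not how any specific management product works. The `UnitReading` type, the unit names, and the 2 °C threshold are all hypothetical, chosen only to show the idea.

```python
from dataclasses import dataclass


@dataclass
class UnitReading:
    """One snapshot of a unit's temperatures (hypothetical structure)."""
    name: str
    return_c: float   # air temperature entering the unit
    supply_c: float   # air temperature leaving the unit


def units_not_cooling(readings, min_delta_c=2.0):
    """Return names of units whose return-to-supply drop is below the threshold.

    min_delta_c is an assumed cutoff; a real system would tune it per site.
    """
    return [r.name for r in readings if r.return_c - r.supply_c < min_delta_c]


# Made-up readings for three units.
readings = [
    UnitReading("CRAC-1", return_c=28.0, supply_c=15.5),
    UnitReading("CRAC-2", return_c=27.5, supply_c=16.0),
    UnitReading("CRAC-3", return_c=26.0, supply_c=25.4),  # fan running, no cooling
]

print(units_not_cooling(readings))
```

A coordinating controller could use a check like this as one input when deciding which units to run, slow down, or switch off, so that running units don't push uncooled air into the plenum.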

Consider this example. One company’s 40,000-square-foot data center appeared to be out of cooling capacity. After deploying an intelligent energy management system, not only did energy usage drop, but the company was able to increase its data center IT load by 40% without adding air conditioners and, in fact, after decommissioning two existing units. The energy management system also maintained the desired inlet air temperatures under this higher load.

Consider going smarter before deciding to buy additional equipment. The savings grow even larger, year over year, once you count the avoided maintenance costs for new equipment and the energy saved by balancing capacity loads more efficiently.