Capacity management involves decisions about space, power, and cooling.

Space is the easiest. You can assess it by inspection.

Power is also fairly easy. The capacity of a circuit is known and fixed, and the load on a circuit is easy to measure.

Cooling is the hardest. The capacity of cooling equipment is not fixed: it depends on how the equipment is operated, and it degrades over time. Even harder is the fact that cooling is distributed. Heat and air follow the paths of least resistance and don’t always go where you would expect. For these reasons and more, mission-critical facilities are designed and built with far more cooling capacity than they need. And yet many operators add even more cooling each time there is a move, add, or change to IT equipment, because that’s been a safer bet than guessing wrong.

Here is a situation we frequently observe:

Operations will receive frequent requests to add or change IT loads as a normal course of business. In large or multi-site facilities, these requests may occur daily. Let’s say that operations receives a request to add 50 kW to a particular room. Operations will typically add 70 kW of new cooling.

This provisioning is calculated assuming a full load for each server, with the full load being determined from server nameplate data. In reality, it’s highly unlikely that all cabinets in a room will be fully loaded, and it is equally unlikely that any given server will ever draw its nameplate power. And remember, the room was originally designed with excess cooling capacity. When you add even more cooling to these rooms, you escalate the over-provisioning. Capital and energy are wasted.
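To make the arithmetic concrete, here is a rough sketch of the example above in Python. The 50 kW request and 70 kW of added cooling come from the scenario; the fraction of nameplate power that servers actually draw (`assumed_draw_fraction`) is a purely hypothetical value chosen for illustration, and a real figure would have to come from measurement.

```python
# Illustrative only: assumed_draw_fraction is a hypothetical value,
# not measured data from any facility.

def cooling_for(it_load_kw: float, margin: float) -> float:
    """Cooling (kW) provisioned for a given IT load at a given margin."""
    return it_load_kw * margin

requested_kw = 50.0            # nameplate-based request from the example
margin = 70.0 / 50.0           # 70 kW of cooling added per 50 kW requested
assumed_draw_fraction = 0.6    # hypothetical: real draw as a share of nameplate

cooling_added_kw = cooling_for(requested_kw, margin)
likely_load_kw = requested_kw * assumed_draw_fraction
excess_kw = cooling_added_kw - likely_load_kw

print(f"Cooling added: {cooling_added_kw:.0f} kW")
print(f"Likely heat load: {likely_load_kw:.0f} kW")
print(f"Cooling beyond likely load: {excess_kw:.0f} kW")
```

Under that assumed draw fraction, more than half of the newly added cooling would never be needed, and that is before counting the excess capacity the room was designed with.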

We find that cooling utilization is typically 35 to 40%, which leaves plenty of excess capacity for IT equipment expansions. We also find that in 5 to 10% of situations, equipment performance and capacity have degraded to the point where cooling redundancy is compromised. In these cases, maintenance becomes difficult and there is a greater risk of IT failure due to a thermal event. So, it’s important to know how a room is running before adding cooling. But it isn’t always easy to tell whether cooling units are performing as designed and specified.
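As a minimal sketch of the kind of check this implies, the Python below computes utilization from measured (not rated) unit capacity and tests whether a proposed load still fits with the largest unit out of service. The `CoolingUnit` type, the unit names, all the numbers, and the choice of an N+1 redundancy rule are assumptions made for illustration; a real facility would substitute its own measurements and redundancy policy.

```python
from dataclasses import dataclass

@dataclass
class CoolingUnit:
    name: str
    rated_kw: float     # capacity as designed and specified
    measured_kw: float  # capacity actually delivered today (hypothetical data)

def utilization(units: list[CoolingUnit], load_kw: float) -> float:
    """Heat load as a fraction of total measured cooling capacity."""
    total = sum(u.measured_kw for u in units)
    if total <= 0:
        raise ValueError("no measured cooling capacity")
    return load_kw / total

def can_absorb(units: list[CoolingUnit], load_kw: float, added_kw: float) -> bool:
    """True if the new total load fits with the largest unit offline (N+1)."""
    capacities = sorted(u.measured_kw for u in units)
    capacity_without_largest = sum(capacities[:-1])
    return load_kw + added_kw <= capacity_without_largest

# Hypothetical room: four 100 kW units, one of which has degraded to 80 kW.
units = [
    CoolingUnit("CRAH-1", 100.0, 100.0),
    CoolingUnit("CRAH-2", 100.0, 100.0),
    CoolingUnit("CRAH-3", 100.0, 100.0),
    CoolingUnit("CRAH-4", 100.0, 80.0),
]
room_load_kw = 140.0

print(f"Utilization: {utilization(units, room_load_kw):.0%}")
print("Can absorb 50 kW:", can_absorb(units, room_load_kw, 50.0))
print("Can absorb 150 kW:", can_absorb(units, room_load_kw, 150.0))
```

Using measured capacity in place of rated capacity is the point of the sketch: a degraded unit shrinks the redundancy margin even when the nameplate totals look comfortable.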

How can operations managers make more cost-effective – and safe – planning decisions? Analytics.

Analytics using real-time data provides managers with the insight to determine whether or not cooling infrastructure can handle a change or expansion to IT equipment, and to manage these changes while minimizing risk. Specifically, analytics can quantify actual cooling capacity, expose equipment degradation, and reveal where there is more or less cooling reserve in a room for optimal placement of physical and virtual IT assets.

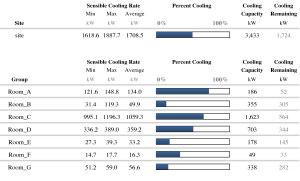

Consider the following analytics-driven capacity report. Continually updated by a sensor network, the report shows exactly where capacity is available and where it is not. With this data alone, you can determine where you can safely and immediately add IT load with no CapEx investment. And, in those situations where you do need to add cooling, the report will predict with high confidence how much you need.

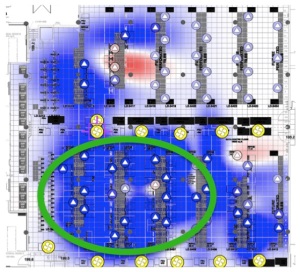

Yet you can go deeper still. By pairing the capacity report with a cooling reserve map (below), you can determine where you can safely place additional load in the desired room. You can also see where you should locate your most critical assets and, when you do need that new air conditioner, where you should place it.


Using these reports, operations can:

- avoid the CapEx cost of more cooling every time IT equipment is added;

- avoid the risk of cooling construction in production data rooms when it is often not needed;

- avoid the delayed time to revenue from adding cooling to a facility that doesn’t need it.

In addition, analytics used in this way avoids unnecessary energy and maintenance OpEx costs.

Stop guessing and start practicing the art of avoidance with analytics.