Ten Tips For Cooling Your Data Center

Even as data centers grow in size and complexity, there are still relatively simple ways to reduce data center energy costs. If you are planning an overall energy cost reduction, it makes sense to start with cooling, which likely accounts for at least 50% of your data center energy spend. Start with the assumption that your data center is over-cooled and consider the following:

Turn Off Redundant Cooling Units. You know you have them. Figure out which are truly unnecessary and turn them off. Of course, this can be tricky. See my previous blog post on Data Center Energy Savings.

Raise Your Temperature Setting. You can likely raise the temperature a degree or two and still stay within ASHRAE limits.
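To see how much room you might have, here is a minimal Python sketch that compares your warmest server inlet reading against ASHRAE's recommended inlet range. The 18 to 27 °C bounds reflect the recommended envelope in ASHRAE's thermal guidelines as I understand them. The function name and the sample readings are hypothetical, so verify the limits for your own equipment class before changing a setpoint.

```python
# Recommended server inlet range in degrees C (assumed from ASHRAE's
# thermal guidelines; verify against the current edition for your equipment).
ASHRAE_RECOMMENDED_MIN_C = 18.0
ASHRAE_RECOMMENDED_MAX_C = 27.0


def setpoint_headroom_c(inlet_temps_c):
    """Return how many degrees C the warmest inlet sits below the recommended maximum.

    A negative result means at least one inlet is already above the recommended range.
    """
    if not inlet_temps_c:
        raise ValueError("need at least one inlet temperature reading")
    return ASHRAE_RECOMMENDED_MAX_C - max(inlet_temps_c)


# Hypothetical inlet readings from a few racks, in degrees C.
readings = [20.5, 21.0, 22.5, 23.0]
headroom = setpoint_headroom_c(readings)
print(f"Warmest inlet: {max(readings):.1f} C, headroom: {headroom:.1f} C")
```

Positive headroom only suggests that a small increase may be safe. Raise the setting a degree at a time and watch the warmest inlets, since they will not all rise by the same amount.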

Turn Off Your Humidity Controls, unless you really need them. Most data centers do not.

Use Variable Speed Drives, but don’t run them all at 100%, which defeats their purpose. They are one of the biggest drivers of energy efficiency in a data center.
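The reason running below 100% matters so much comes from the fan affinity laws: for an idealized fan, airflow scales with speed, while power scales with roughly the cube of speed. The sketch below is a rough illustration under that idealized assumption. Real fans and drives have losses that this ignores, and the function name is my own.

```python
def fan_power_fraction(speed_fraction):
    """Estimate fan power as a fraction of full-speed power using the cube law.

    speed_fraction is fan speed as a fraction of full speed (0.0 to 1.0).
    This is the idealized affinity-law relationship, not a measured curve.
    """
    if not 0.0 <= speed_fraction <= 1.0:
        raise ValueError("speed_fraction must be between 0.0 and 1.0")
    return speed_fraction ** 3


# Print the estimated power draw at a few fan speeds.
for speed in (1.0, 0.9, 0.8, 0.7):
    power = fan_power_fraction(speed)
    print(f"{speed:.0%} speed -> about {power:.0%} of full power")
```

Under this approximation, slowing a fan to 80% of full speed cuts its power draw to about half, which is why a drive left at 100% gives up most of its benefit.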

Use Plug Fans for CRAH Units. They have twice the efficiency and they distribute air more effectively.

Use Economizers. Take advantage of outside air when you can.
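As a rough illustration of "when you can," here is a simplified Python sketch of an air-side economizer decision based on temperature alone. The function name, the 2 °C margin, and the sample values are all hypothetical. Real economizer controls also weigh factors such as moisture and air quality, so treat this as a sketch of the idea rather than a control strategy.

```python
def can_use_outside_air(outside_temp_c, supply_setpoint_c, margin_c=2.0):
    """Return True when outside air is cool enough to help with cooling.

    Outside air qualifies only if it is at least margin_c degrees C below
    the supply air setpoint. The default margin is an illustrative assumption.
    """
    return outside_temp_c <= supply_setpoint_c - margin_c


# Hypothetical conditions: an 18 C supply setpoint on a cool day and a warm day.
print(can_use_outside_air(outside_temp_c=10.0, supply_setpoint_c=18.0))
print(can_use_outside_air(outside_temp_c=17.0, supply_setpoint_c=18.0))
```

The margin keeps the system from switching to outside air when it is only marginally cooler than the supply setpoint and would contribute little.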

Use An Automated Cooling Management System. Remove the guesswork.

Use Hot and Cold Aisle Arrangements. Don’t blow hot exhaust air from some servers into the inlets of other servers.

Use Containment. Reduce air mixing within a single space.

Remove Obstructions. This sounds simple, but a poorly placed cart can create a hot spot. Check every day.

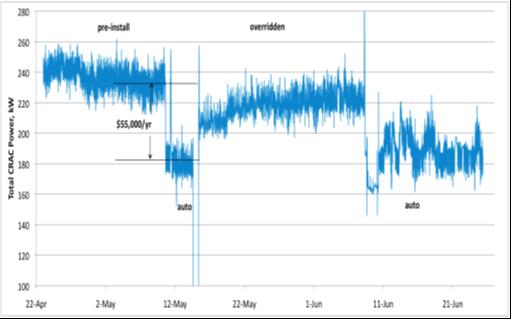

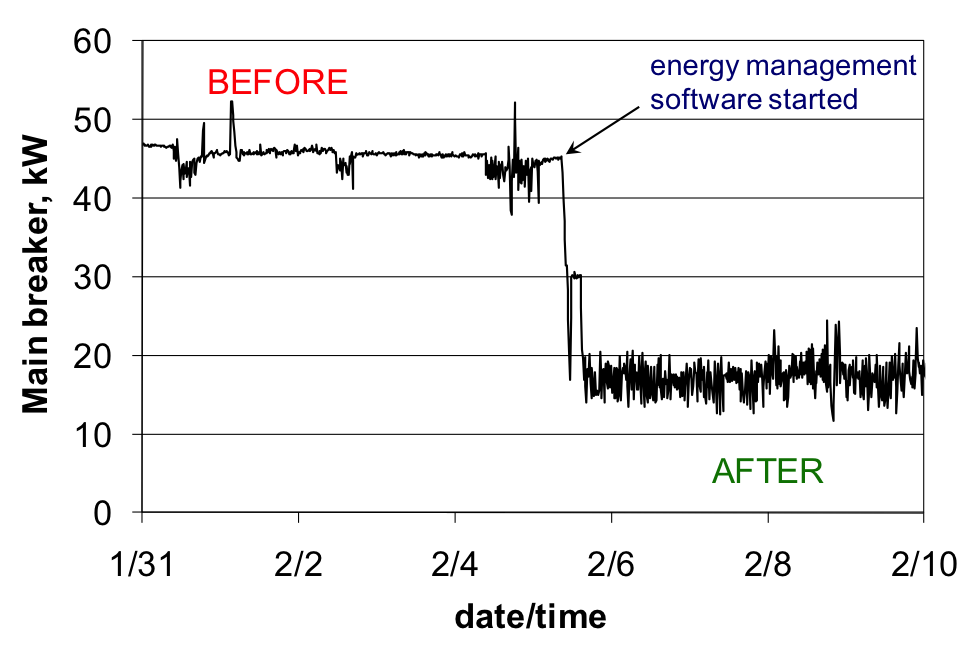

Here’s an example of the effect an automated cooling management system can have.

The first section shows a benchmark of the data center's energy consumption before automated cooling. The second section shows energy consumption after the automated cooling system was turned on. The third section shows consumption when the system was turned off and manual control was resumed, and the fourth section shows consumption with fully automated control. Notice that the energy savings eroded almost completely in less than a month of manual control, but returned immediately once automatic control was restored.