To date, Vigilent has saved more than 1 billion kilowatt hours of energy, delivering $100 million in savings to our customers. This also means we reduced the amount of CO2 released into the atmosphere by over 700,000 metric tons, equivalent to not acquiring and burning almost 4,000 railcars of coal. This matters because climate change is real.

Earlier this year, Vigilent announced its support for the Low-Carbon USA initiative, a consortium of leading businesses across the United States that support the Paris Climate Accord with the goal of limiting global temperature rise to well below 2 degrees Celsius. Conservation plays its part, but innovation driving efficiency and renewable power creation will make the real difference. Vigilent and its employees are fiercely proud to be making a tangible difference every day with the work that we do.

Beyond this remarkable energy savings milestone, I am very proud of the market recognition Vigilent achieved this year. Bloomberg recognized Vigilent as a “New Energy Pioneer.” Fierce Innovation named Vigilent the Best in Show: Green Application & Data Centers (telecom category).

Of equal significance, Vigilent has become broadly recognized as a leader in the emerging field of industrial IoT. With our early start in this industry, integrating sensors and machine learning for measurable advantage long before they ever became a “thing,” Vigilent has demonstrated significant market traction with concrete results. The industry has recognized Vigilent’s IoT achievements with the following awards this year:

TiE50 Top Startup: IoT

IoT Innovator Best Product: Commercial and Industrial Software

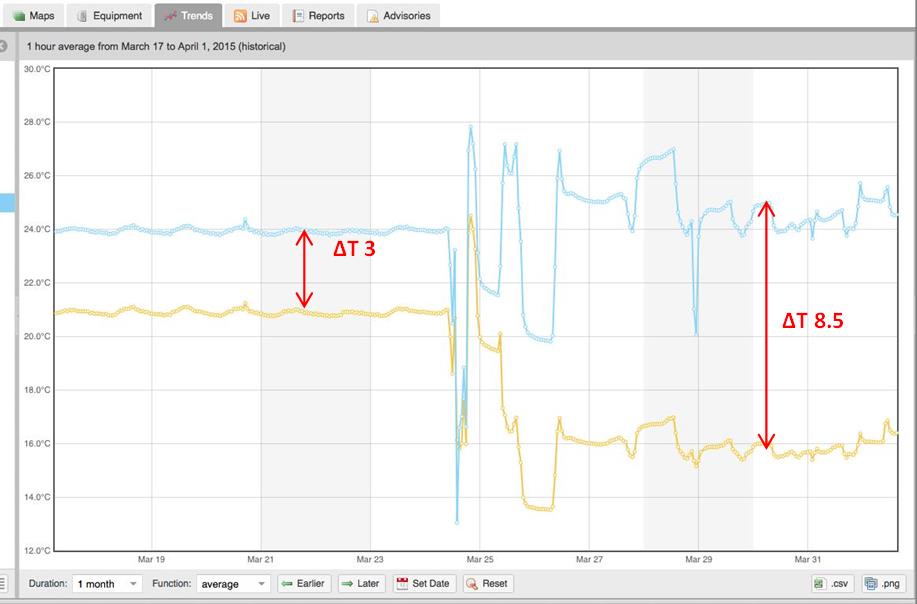

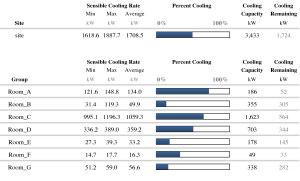

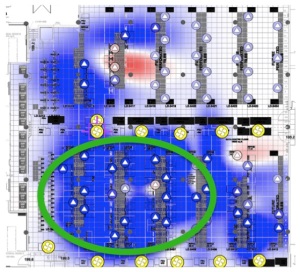

We introduced Vigilent prescriptive analytics this summer with shocking results, and I say that in a good way. Our customers have uniformly received insights that surprised them. These insights have ranged from unrealized capacity to failing equipment in critical areas. The analytics are also helping customers meet SLA requirements with virtually no extra work and identify areas drifting out of compliance, so facility operators can quickly resolve issues as soon as a temperature goes beyond a specified threshold.

Vigilent dynamic cooling management systems are actively used in the world’s largest colos and telcos, and in Fortune 500 companies spanning the globe. We have expanded relationships with long-term partners NTT Facilities and Schneider Electric, who have introduced Vigilent to new regions such as Latin America and Greater Asia. We signed a North America-focused partnership with Siemens, which leverages Siemens Demand Flow and the Vigilent system to optimize efficiency and manage data center challenges across the white space and chiller plant. We are very pleased that the world’s leading data center infrastructure and service vendors have chosen to include Vigilent in their solution portfolios.

We thank you, our friends, customers and partners, for your continued support and look forward to another breakout year as we help the businesses of the world manage energy use intelligently and combat climate change.